Enhancing Emotion Data Collection with Selenium Pt.2

László Zabb

July 15


2 minute read

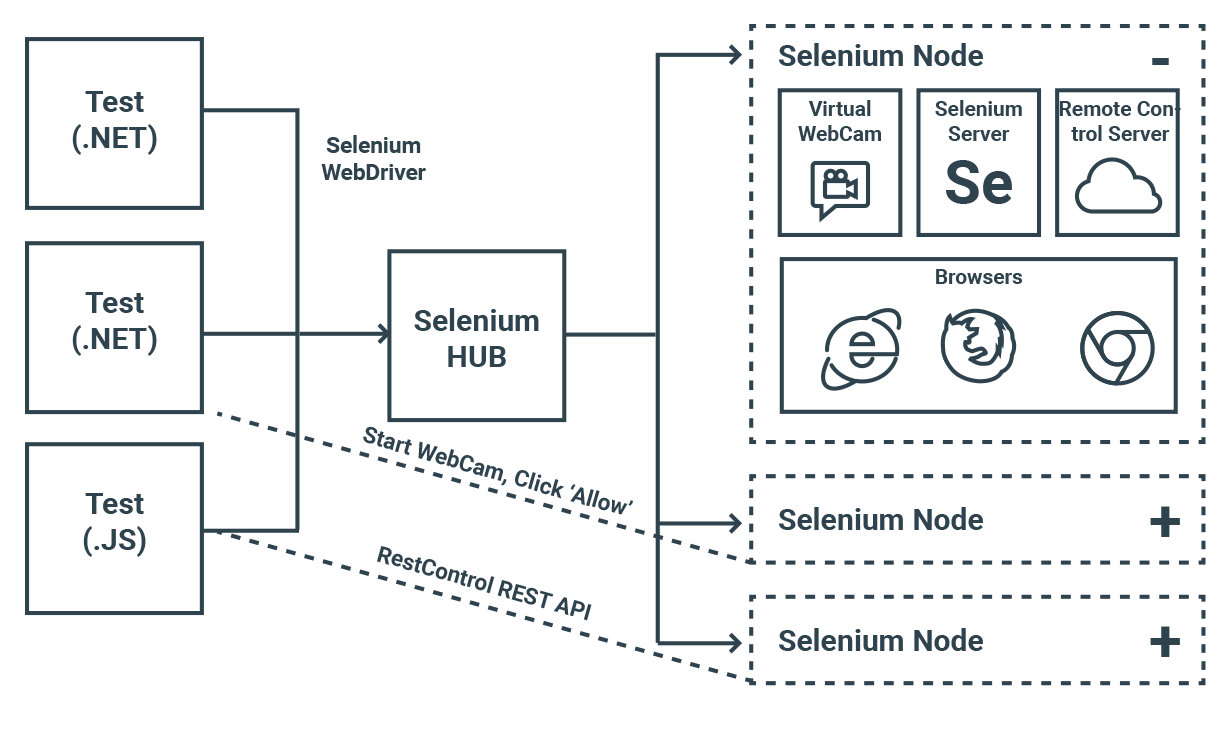

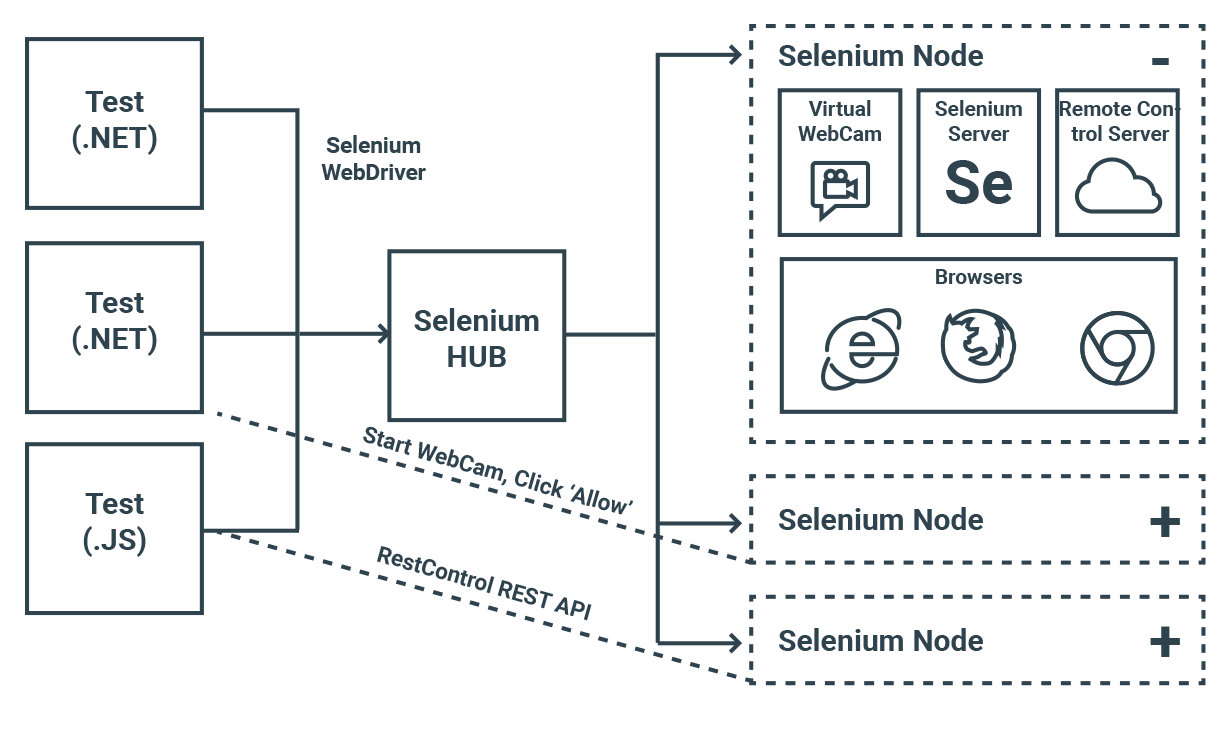

We ended up creating our own Selenium Grid, because providers such as BrowserStack did not support webcams or the customizations we needed, such as a way to click "Allow" on Flash's webcam prompt. We did not aim to build a wide variety of home-made environments. Instead, we focused on the advantages of our own grid and used external providers wherever possible.

Our infrastructure is not black magic; BrowserStack most likely uses similar building blocks behind the scenes. We built a standard Selenium Grid with one Hub and several preconfigured Nodes.

The custom part is that every Node has a virtual webcam fed by a short prepared video as a fake input stream, and every Node runs our own RC Service.

The key piece of our solution is the RC Service, which lets us control the webcam and fill the gaps left by Selenium's limitations. It is a simple REST API server that we call RemoteControlService, and it has two important features: it can switch the webcam on and off, and it can move the mouse and click at the desired coordinates (using the WinAPI).
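To make the idea concrete, here is a minimal sketch of such a service using only Python's standard library. It is not RemoteControlService itself: the endpoint paths (`/webcam/on`, `/webcam/off`, `/mouse/click`), the `RemoteControlState` class and the JSON responses are hypothetical. The mouse click is injected as a function so the server can be tested anywhere; `winapi_click` shows how a Windows node could click through `user32` via `ctypes`. How the virtual webcam is actually started or stopped depends on the webcam software and is only represented here by a flag.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.parse import parse_qs, urlparse


def winapi_click(x, y):
    """Left-click at screen coordinates through user32 (Windows nodes only)."""
    import ctypes
    user32 = ctypes.windll.user32
    user32.SetCursorPos(x, y)
    user32.mouse_event(0x0002, 0, 0, 0, 0)  # MOUSEEVENTF_LEFTDOWN
    user32.mouse_event(0x0004, 0, 0, 0, 0)  # MOUSEEVENTF_LEFTUP


class RemoteControlState:
    """Hypothetical node state: webcam flag plus a pluggable click function."""

    def __init__(self, click_fn):
        self.webcam_on = False
        self._click_fn = click_fn
        self._lock = threading.Lock()

    def set_webcam(self, on):
        with self._lock:
            # A real service would start/stop the virtual webcam feed here.
            self.webcam_on = on

    def click(self, x, y):
        with self._lock:
            self._click_fn(x, y)


def make_handler(state):
    class Handler(BaseHTTPRequestHandler):
        def _reply(self, code, payload):
            body = json.dumps(payload).encode()
            self.send_response(code)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def do_POST(self):
            url = urlparse(self.path)
            query = parse_qs(url.query)
            if url.path == "/webcam/on":
                state.set_webcam(True)
            elif url.path == "/webcam/off":
                state.set_webcam(False)
            elif url.path == "/mouse/click":
                try:
                    x, y = int(query["x"][0]), int(query["y"][0])
                except (KeyError, ValueError):
                    return self._reply(400, {"error": "x and y must be integers"})
                state.click(x, y)
            else:
                return self._reply(404, {"error": "unknown endpoint"})
            self._reply(200, {"webcam_on": state.webcam_on})

        def log_message(self, *args):
            pass  # silence per-request logging

    return Handler

# On a Windows node one might run (hypothetical port):
# ThreadingHTTPServer(("0.0.0.0", 8080),
#                     make_handler(RemoteControlState(winapi_click))).serve_forever()
```

Keeping the click behind a function makes the HTTP layer platform-independent, so only `winapi_click` is tied to Windows.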

In a nutshell, when a test needs a webcam, it calls the API to switch the webcam on; when the test reaches the point where it should allow webcam access, it tells the API to click at the corresponding position. The click is repeated until it succeeds, and we also wanted to measure how many clicks were needed on average, per browser, to succeed. Beyond these two main features, we added more functionality, since we already had a custom API running on the servers.
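The test-side loop can be sketched as below, assuming two hypothetical hooks: `click`, which would ask the node's RC Service to click the "Allow" position, and `is_allowed`, which would check (for example through the page) whether webcam access was granted. The loop returns the number of clicks it took, and `ClickStats` keeps those counts per browser so their average can be reported.

```python
import time
from collections import defaultdict
from statistics import mean
from typing import Callable, Dict, List


def click_until_allowed(click: Callable[[], None],
                        is_allowed: Callable[[], bool],
                        max_attempts: int = 10,
                        delay_s: float = 0.0) -> int:
    """Click 'Allow' until access is granted; return how many clicks it took."""
    for attempt in range(1, max_attempts + 1):
        click()
        if is_allowed():
            return attempt
        if attempt < max_attempts:
            time.sleep(delay_s)  # give the prompt time to react before retrying
    raise TimeoutError(f"webcam still not allowed after {max_attempts} clicks")


class ClickStats:
    """Collects click counts per browser and reports their average."""

    def __init__(self) -> None:
        self._counts: Dict[str, List[int]] = defaultdict(list)

    def record(self, browser: str, clicks: int) -> None:
        self._counts[browser].append(clicks)

    def average(self, browser: str) -> float:
        return mean(self._counts[browser])
```

Bounding the loop with `max_attempts` keeps a broken prompt from hanging a test forever, while the recorded counts give the per-browser average directly.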

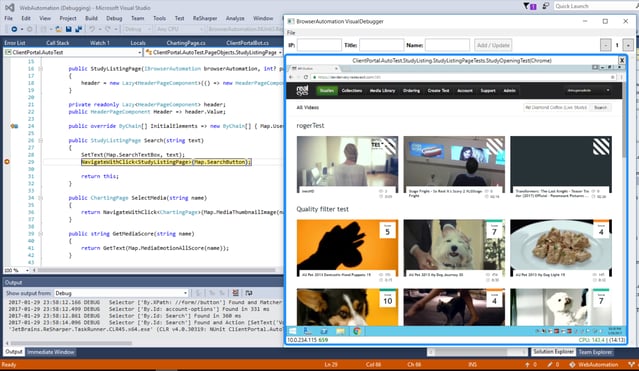

On top of this infrastructure, we also built a framework based on two main principles. First, it is designed to support multiple providers (our local grid, BrowserStack, local machine execution) and to accept new ones whenever we find a better option. Obviously, the framework cannot compensate for a provider's limitations, but the feature subset common to all providers is big enough to share most of the framework code. Second, it should manage complexity and simplify test writing. To that end, we built a VisualDebugger tool to improve productivity; it lets developers observe and debug tests while they run. The developer simply puts a breakpoint in the code and starts the test; the tool then launches automatically, connects to the Node on which the test is running, and lets the developer follow visually what the test does.
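One way to express the multi-provider principle is sketched below. The `Provider` record, the registry, the feature names and the example hub URLs are all hypothetical illustrations, not the framework's actual API. Each provider declares what it can do, `common_features` computes the shared subset the framework can rely on everywhere, and `require` lets a test fail clearly when it needs something (such as the webcam) that only some providers offer.

```python
from dataclasses import dataclass, field
from functools import reduce
from typing import Callable, Dict, FrozenSet, Iterable, Optional


@dataclass(frozen=True)
class Provider:
    """Where a test runs; hub_url is None for local machine execution."""
    name: str
    hub_url: Optional[str]
    features: FrozenSet[str] = field(default_factory=frozenset)

    def supports(self, feature: str) -> bool:
        return feature in self.features


class UnsupportedFeature(Exception):
    """Raised when a test needs something its provider cannot offer."""


_REGISTRY: Dict[str, Callable[[], Provider]] = {}


def register(name: str):
    """Decorator adding a provider factory; new providers need no other change."""
    def wrap(factory: Callable[[], Provider]) -> Callable[[], Provider]:
        _REGISTRY[name] = factory
        return factory
    return wrap


@register("local-grid")
def _local_grid() -> Provider:
    return Provider("local-grid", "http://grid-hub.example:4444/wd/hub",
                    frozenset({"browsers", "screenshots", "webcam", "mouse-click"}))


@register("browserstack")
def _browserstack() -> Provider:
    return Provider("browserstack", "https://hub-cloud.browserstack.com/wd/hub",
                    frozenset({"browsers", "screenshots", "many-os-versions"}))


@register("local")
def _local_machine() -> Provider:
    return Provider("local", None, frozenset({"browsers", "screenshots", "webcam"}))


def get_provider(name: str) -> Provider:
    try:
        return _REGISTRY[name]()
    except KeyError:
        raise ValueError(f"unknown provider {name!r}; known: {sorted(_REGISTRY)}")


def common_features(providers: Iterable[Provider]) -> FrozenSet[str]:
    """Features every given provider supports (the shared subset)."""
    sets = [p.features for p in providers]
    return reduce(frozenset.intersection, sets) if sets else frozenset()


def require(provider: Provider, feature: str) -> None:
    if not provider.supports(feature):
        raise UnsupportedFeature(f"{provider.name} does not support {feature!r}")
```

A test would typically call `require(provider, "webcam")` up front, so running it against a provider without webcam support fails with a clear message instead of partway through.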

Since we first started using them, we have improved the framework and the infrastructure considerably, but they are still young. We actively use them for automated testing, currently running 100-200 automated test scenarios, and we have also applied them to real-world load simulation.

This is only the beginning of the journey: we keep facing new issues, experimenting, and learning. On the one hand, automated testing requires significant development investment and commitment; on the other hand, it is the only way to scale testing to match the needs of a growing system and organization.